Comparing Model Performance: Linear Regression, Decision Tree, and Random Forest for Predicting Mating Success

🇨🇦OkCupid Dataset

In this blog post, we'll compare the performance of three regression models (Linear Regression, Decision Tree Regression, and Random Forest Regression) applied to a dating dataset where we predict mating success.

We will assess each model's performance based on Root Mean Squared Error (RMSE) and the results from cross-validation.
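As a quick refresher, RMSE is the square root of the average squared difference between labels and predictions, so it is expressed in the same units as the target. The sketch below computes it by hand for the five labels and Linear Regression predictions printed later in this post; the helper name `rmse` is ours and is not part of the original scripts.

```python
import math

def rmse(y_true, y_pred):
    """Root Mean Squared Error: sqrt of the mean squared difference."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    squared_errors = [(t - p) ** 2 for t, p in zip(y_true, y_pred)]
    return math.sqrt(sum(squared_errors) / len(squared_errors))

# The five labels and Linear Regression predictions printed later in this post.
labels = [0.0, 0.0, 4.0, 0.0, 0.0]
predictions = [0.2398392, 1.08243193, 2.84553505, 0.5059264, 0.99843327]

five_row_rmse = rmse(labels, predictions)
print(f"RMSE on these five rows: {five_row_rmse:.4f}")
```

Five rows are far too few to judge a model; the scripts in this post compute the same quantity over the whole training set with `mean_squared_error` followed by `np.sqrt`.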

Linear Regression is often the go-to model for regression tasks due to its simplicity and efficiency.

./chapter2/data2/train/train1.py

import os

import pandas as pd

from sklearn.linear_model import LinearRegression

from sklearn.preprocessing import StandardScaler

from sklearn.metrics import mean_squared_error

import numpy as np

# Define the path to the dataset

DATING_PATH = "./chapter2/datasets/dating/copies5"

# Function to load the raw data

def load_dating_data(dating_path=DATING_PATH):

    csv_path = os.path.join(dating_path, "6_classified_features_needed_label_stratified_train_set.csv")

    return pd.read_csv(csv_path)

# Function to load the prepared (cleaned) data

def load_cleaned_dating_data(dating_path=DATING_PATH):

    csv_path = os.path.join(dating_path, "6_1_cleaned_dating_data_features_and_labels.csv")

    return pd.read_csv(csv_path)

# Load the raw data

dating_data = load_dating_data()

# print(f"Rows after loading raw data: {dating_data.shape[0]}")

# Load the cleaned data (prepared data)

dating_data_prepared = load_cleaned_dating_data()

# print(f"Rows after loading cleaned data: {dating_data_prepared.shape[0]}")

# Separate features and labels

features = dating_data.drop('mating_success', axis=1) # Remove the target variable from features

labels = dating_data['mating_success'] # The target variable

# print(f"Rows after separating features and labels (raw data): {features.shape[0]}, {labels.shape[0]}")

# Separate features and labels for prepared (cleaned) data

features_prepared = dating_data_prepared.drop('mating_success', axis=1) # Remove the target variable from features

# print(f"Rows after separating features and labels (prepared data): {features_prepared.shape[0]}")

# Drop the persistent ID column(s) -- assuming the column name is 'persistent_id' or something similar

features = features.drop(columns=['persistent_id'], errors='ignore') # 'errors=ignore' ensures no error if the column doesn't exist

features_prepared = features_prepared.drop(columns=['persistent_id'], errors='ignore') # 'errors=ignore' ensures no error if the column doesn't exist

# print(f"Rows after dropping 'persistent_id' columns (raw and prepared): {features.shape[0]}, {features_prepared.shape[0]}")

# Initialize the Linear Regression model

lin_reg = LinearRegression()

# Train the model on the entire cleaned and scaled data

lin_reg.fit(features_prepared, labels)

# print(f"Model trained with {features_prepared.shape[0]} rows.")

# Example: Make predictions on the first 5 rows of data

some_data = features.iloc[:5] # First 5 data points

some_data_prepared = features_prepared.iloc[:5] # First 5 data points

some_labels = labels.iloc[:5] # First 5 labels

# Make predictions

predictions = lin_reg.predict(some_data_prepared)

# Output predictions and actual labels

print("Predictions:", predictions)

print("Labels:", list(some_labels))

# Calculate the Mean Squared Error and RMSE for the whole dataset

predictions_all = lin_reg.predict(features_prepared)

mse = mean_squared_error(labels, predictions_all)

rmse = np.sqrt(mse)

print("RMSE:", rmse)

# Predictions: [0.2398392 1.08243193 2.84553505 0.5059264 0.99843327]

# Labels: [0.0, 0.0, 4.0, 0.0, 0.0]

# RMSE: 1.1439084247515616

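Linear Regression yielded an RMSE of 1.1439 on the training set. Because this is measured on the same rows the model was fitted to, it is best read as a baseline; the cross-validation results later in the post show whether it holds up on unseen data.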

Decision Tree Regression is a flexible model, but it is highly prone to overfitting when the tree is grown on the training data without any regularization.

./chapter2/data2/train/train3.py

import os

import pandas as pd

from sklearn.tree import DecisionTreeRegressor

from sklearn.metrics import mean_squared_error

import numpy as np

# Define the path to the dataset

DATING_PATH = "./chapter2/datasets/dating/copies5"

# Function to load the raw data

def load_dating_data(dating_path=DATING_PATH):

    csv_path = os.path.join(dating_path, "6_classified_features_needed_label_stratified_train_set.csv")

    return pd.read_csv(csv_path)

# Function to load the prepared (cleaned) data

def load_cleaned_dating_data(dating_path=DATING_PATH):

    csv_path = os.path.join(dating_path, "6_1_cleaned_dating_data_features_and_labels.csv")

    return pd.read_csv(csv_path)

# Load the raw data

dating_data = load_dating_data()

# print(f"Rows after loading raw data: {dating_data.shape[0]}")

# Load the cleaned data (prepared data)

dating_data_prepared = load_cleaned_dating_data()

# print(f"Rows after loading cleaned data: {dating_data_prepared.shape[0]}")

# Separate features and labels

features = dating_data.drop('mating_success', axis=1) # Remove the target variable from features

labels = dating_data['mating_success'] # The target variable

# print(f"Rows after separating features and labels (raw data): {features.shape[0]}, {labels.shape[0]}")

# Separate features and labels for prepared (cleaned) data

features_prepared = dating_data_prepared.drop('mating_success', axis=1) # Remove the target variable from features

# print(f"Rows after separating features and labels (prepared data): {features_prepared.shape[0]}")

# Drop the persistent ID column(s) -- assuming the column name is 'persistent_id' or something similar

features = features.drop(columns=['persistent_id'], errors='ignore') # 'errors=ignore' ensures no error if the column doesn't exist

features_prepared = features_prepared.drop(columns=['persistent_id'], errors='ignore') # 'errors=ignore' ensures no error if the column doesn't exist

# print(f"Rows after dropping 'persistent_id' columns (raw and prepared): {features.shape[0]}, {features_prepared.shape[0]}")

# Initialize the Decision Tree Regressor model

tree_reg = DecisionTreeRegressor()

# Train the model on the entire cleaned and scaled data

tree_reg.fit(features_prepared, labels)

# print(f"Model trained with {features_prepared.shape[0]} rows.")

# Example: Make predictions on the first 5 rows of data

some_data_prepared = features_prepared.iloc[:5] # First 5 data points

some_labels = labels.iloc[:5] # First 5 labels

# Make predictions

predictions = tree_reg.predict(some_data_prepared)

# Output predictions and actual labels

print("Predictions:", predictions)

print("Labels:", list(some_labels))

# Calculate the Mean Squared Error and RMSE for the whole dataset

predictions_all = tree_reg.predict(features_prepared)

tree_mse = mean_squared_error(labels, predictions_all)

tree_rmse = np.sqrt(tree_mse)

print("Tree RMSE:", tree_rmse)

# Predictions: [0. 0. 4. 0.5 0. ]

# Labels: [0.0, 0.0, 4.0, 0.0, 0.0]

# Tree RMSE: 0.19546897919468237

The Decision Tree produced an RMSE of 0.1955 on the training data, indicating a very tight fit to the training set. However, we suspect overfitting, which is later confirmed during cross-validation.
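To illustrate what this kind of overfitting looks like, and how regularization narrows the gap, here is a minimal sketch on synthetic data. It does not use the dating dataset, and the hyperparameter values (`max_depth=4`, `min_samples_leaf=20`) are illustrative assumptions rather than tuned settings. The helper `train_and_cv_rmse` is ours.

```python
import numpy as np
from sklearn.model_selection import cross_val_score
from sklearn.tree import DecisionTreeRegressor

# Synthetic stand-in data (NOT the dating dataset): a noisy nonlinear target.
rng = np.random.default_rng(42)
X = rng.uniform(-3, 3, size=(500, 4))
y = np.sin(X[:, 0]) + 0.5 * X[:, 1] + rng.normal(scale=1.0, size=500)

def train_and_cv_rmse(model, X, y):
    """Return (training RMSE, mean 10-fold cross-validated RMSE)."""
    train_pred = model.fit(X, y).predict(X)
    train_rmse = np.sqrt(np.mean((y - train_pred) ** 2))
    neg_mse = cross_val_score(model, X, y,
                              scoring="neg_mean_squared_error", cv=10)
    return train_rmse, np.sqrt(-neg_mse).mean()

# Unconstrained tree: free to grow until every leaf is pure.
full_tree = DecisionTreeRegressor(random_state=0)
# Constrained tree: depth and leaf size are capped (illustrative values).
small_tree = DecisionTreeRegressor(max_depth=4, min_samples_leaf=20,
                                   random_state=0)

full_train, full_cv = train_and_cv_rmse(full_tree, X, y)
small_train, small_cv = train_and_cv_rmse(small_tree, X, y)

print(f"Unconstrained: train RMSE {full_train:.3f}, CV RMSE {full_cv:.3f}")
print(f"Constrained:   train RMSE {small_train:.3f}, CV RMSE {small_cv:.3f}")
```

A large difference between training RMSE and cross-validated RMSE is the warning sign to look for. The constrained tree gives up some training accuracy in exchange for a much smaller gap.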

Cross-validation was used to check the generalization of the models:

./chapter2/data2/train/train4.py

import os

import pandas as pd

from sklearn.tree import DecisionTreeRegressor

from sklearn.linear_model import LinearRegression

from sklearn.ensemble import RandomForestRegressor

from sklearn.metrics import mean_squared_error

from sklearn.model_selection import cross_val_score

import numpy as np

# Define the path to the dataset

DATING_PATH = "./chapter2/datasets/dating/copies5"

# Function to load the raw data

def load_dating_data(dating_path=DATING_PATH):

    csv_path = os.path.join(dating_path, "6_classified_features_needed_label_stratified_train_set.csv")

    return pd.read_csv(csv_path)

# Function to load the prepared (cleaned) data

def load_cleaned_dating_data(dating_path=DATING_PATH):

    csv_path = os.path.join(dating_path, "6_1_cleaned_dating_data_features_and_labels.csv")

    return pd.read_csv(csv_path)

# Load the raw data

dating_data = load_dating_data()

# print(f"Rows after loading raw data: {dating_data.shape[0]}")

# Load the cleaned data (prepared data)

dating_data_prepared = load_cleaned_dating_data()

# print(f"Rows after loading cleaned data: {dating_data_prepared.shape[0]}")

# Separate features and labels

features = dating_data.drop('mating_success', axis=1) # Remove the target variable from features

labels = dating_data['mating_success'] # The target variable

# Separate features and labels for prepared (cleaned) data

features_prepared = dating_data_prepared.drop('mating_success', axis=1) # Remove the target variable from features

# Drop the persistent ID column(s) -- assuming the column name is 'persistent_id' or something similar

features = features.drop(columns=['persistent_id'], errors='ignore') # 'errors=ignore' ensures no error if the column doesn't exist

features_prepared = features_prepared.drop(columns=['persistent_id'], errors='ignore') # 'errors=ignore' ensures no error if the column doesn't exist

# Initialize the models

tree_reg = DecisionTreeRegressor()

lin_reg = LinearRegression()

forest_reg = RandomForestRegressor()

# Function to display RMSE scores

def display_scores(scores):

    print("Scores:", scores)

    print("Mean:", scores.mean())

    print("Standard deviation:", scores.std())

    print()

# 1. Decision Tree Cross-validation

tree_scores = cross_val_score(tree_reg, features_prepared, labels,
                              scoring="neg_mean_squared_error", cv=10)

tree_rmse_scores = np.sqrt(-tree_scores)

print("Decision Tree Regressor:")

display_scores(tree_rmse_scores)

# 2. Linear Regression Cross-validation

lin_scores = cross_val_score(lin_reg, features_prepared, labels,
                             scoring="neg_mean_squared_error", cv=10)

lin_rmse_scores = np.sqrt(-lin_scores)

print("Linear Regression:")

display_scores(lin_rmse_scores)

# 3. Random Forest Regressor Cross-validation

forest_scores = cross_val_score(forest_reg, features_prepared, labels,
                                scoring="neg_mean_squared_error", cv=10)

forest_rmse_scores = np.sqrt(-forest_scores)

print("Random Forest Regressor:")

display_scores(forest_rmse_scores)

# Decision Tree Regressor:

# Scores: [1.61247778 1.61731719 1.52758062 1.62473912 1.62029613 1.60026824

# 1.72091084 1.56723718 1.61000336 1.65374219]

# Mean: 1.6154572652677406

# Standard deviation: 0.047975024747069335

# Linear Regression:

# Scores: [1.087067 1.20642808 1.14716698 1.14047653 1.17885481 1.19030231

# 1.20752076 1.1213884 1.05076323 1.13498424]

# Mean: 1.1464952332536744

# Standard deviation: 0.048762821595965296

# Random Forest Regressor:

# Scores: [1.1325505 1.27366403 1.19187283 1.17668492 1.20896404 1.23495211

# 1.27242249 1.17953747 1.11942813 1.21552121]

# Mean: 1.2005597735833196

# Standard deviation: 0.049275556706436206

We also ran a Random Forest Regressor using cross-validation to assess its performance.

The Random Forest model showed a mean RMSE of 1.2006 with a standard deviation of 0.0493. It performed better than the Decision Tree (mean RMSE 1.6155) but slightly worse than Linear Regression (mean RMSE 1.1465).
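For readers wondering what `cross_val_score` does with `scoring="neg_mean_squared_error"`, the sketch below reproduces it with an explicit loop: fit on nine folds, measure the MSE on the held-out fold, repeat ten times. It uses synthetic data rather than the dating dataset, and it assumes the default behaviour for regressors, where an integer `cv` means an unshuffled `KFold`.

```python
import numpy as np
from sklearn.base import clone
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import KFold, cross_val_score

# Synthetic stand-in data (NOT the dating dataset): 200 rows, 3 features.
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 3))
y = X @ np.array([1.5, -2.0, 0.5]) + rng.normal(scale=1.0, size=200)

model = LinearRegression()

# Manual 10-fold loop: train on 9 folds, score on the remaining one.
manual_rmse = []
for train_idx, test_idx in KFold(n_splits=10).split(X):
    fold_model = clone(model).fit(X[train_idx], y[train_idx])
    fold_mse = mean_squared_error(y[test_idx], fold_model.predict(X[test_idx]))
    manual_rmse.append(np.sqrt(fold_mse))
manual_rmse = np.array(manual_rmse)

# The helper returns NEGATIVE MSE (higher is better by convention),
# so we flip the sign before taking the square root.
neg_mse = cross_val_score(model, X, y,
                          scoring="neg_mean_squared_error", cv=10)
helper_rmse = np.sqrt(-neg_mse)

print("Manual loop:    ", manual_rmse.round(4))
print("cross_val_score:", helper_rmse.round(4))
```

The sign flip is why the scripts in this post call `np.sqrt(-scores)` rather than `np.sqrt(scores)`.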

In conclusion, Linear Regression is the best model overall, with Random Forest a close second. The Decision Tree overfitted the training data (training RMSE of 0.1955 versus a cross-validated mean of 1.6155) and is recommended for this problem only with additional regularization.
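As a sanity check on that ranking, the per-fold RMSE values printed by the cross-validation script can be compared fold by fold instead of only through their means. The sketch below copies those values verbatim; the helper `fold_wins` is ours. It assumes the folds contain the same rows for every model, which should hold because an integer `cv` with a regressor uses an unshuffled split.

```python
from statistics import mean

# Per-fold RMSE values copied from the cross-validation output in this post.
tree_rmse = [1.61247778, 1.61731719, 1.52758062, 1.62473912, 1.62029613,
             1.60026824, 1.72091084, 1.56723718, 1.61000336, 1.65374219]
lin_rmse = [1.087067, 1.20642808, 1.14716698, 1.14047653, 1.17885481,
            1.19030231, 1.20752076, 1.1213884, 1.05076323, 1.13498424]
forest_rmse = [1.1325505, 1.27366403, 1.19187283, 1.17668492, 1.20896404,
               1.23495211, 1.27242249, 1.17953747, 1.11942813, 1.21552121]

def fold_wins(a, b):
    """Count the folds in which model a has a lower RMSE than model b."""
    return sum(x < y for x, y in zip(a, b))

lin_vs_forest = fold_wins(lin_rmse, forest_rmse)
forest_vs_tree = fold_wins(forest_rmse, tree_rmse)
# Average per-fold RMSE difference between Random Forest and Linear Regression.
mean_gap = mean(f - l for f, l in zip(forest_rmse, lin_rmse))

print(f"Linear beats Random Forest in {lin_vs_forest} of 10 folds")
print(f"Random Forest beats Decision Tree in {forest_vs_tree} of 10 folds")
print(f"Mean RMSE gap (forest - linear): {mean_gap:.4f}")
```

Note that `RandomForestRegressor()` was created without a `random_state`, so rerunning the script will give slightly different Random Forest scores.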

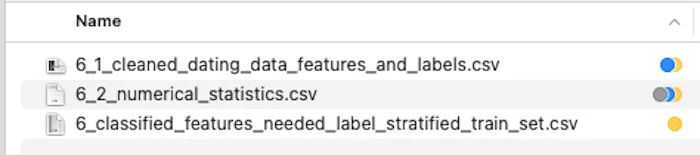
