Data preprocessing is crucial in any data science pipeline, especially when working with real-world datasets that often contain missing values, unscaled features, and categorical variables.

In this blog, we'll describe several steps to clean, scale, and inspect a dating dataset using Python. The dataset includes numerical and categorical features and a target variable to predict.

We'll demonstrate how to handle missing data, perform scaling on numerical columns, and inspect the original and preprocessed data for discrepancies.

We start by loading a dating dataset and separating it into features and labels.

The goal here is to load the data, isolate the features (input variables), and set the target label for prediction.

./chapter2/data2/data_cleaning/c1.py

import os

import pandas as pd

# Define the path to the dataset

DATING_PATH = "./chapter2/datasets/dating/copies4"

def load_dating_data(dating_path=DATING_PATH):

"""

Load the dating data from a CSV file.

"""

csv_path = os.path.join(dating_path, "5_classified_features_needed_label_stratified_train_set.csv")

return pd.read_csv(csv_path)

# Load the training dataset

dating_training_strat = load_dating_data()

# Separate the features (input data) from the label (target variable)

dating_training_features = dating_training_strat.drop("mating_success", axis=1) # Drop the label column to get the features

dating_training_label = dating_training_strat["mating_success"].copy() # Copy the label column

# Ensure persistent_id is part of both features and labels

persistent_id = dating_training_strat["persistent_id"]

# Add persistent_id to both features and label

dating_training_features['persistent_id'] = persistent_id

dating_training_label = pd.concat([persistent_id, dating_training_label], axis=1) # Add persistent_id to labels

# Print the first few rows of the features and labels to verify

print("Features (first 5 rows):\n", dating_training_features.head())

print("\nLabels (first 5 rows):\n", dating_training_label.head())

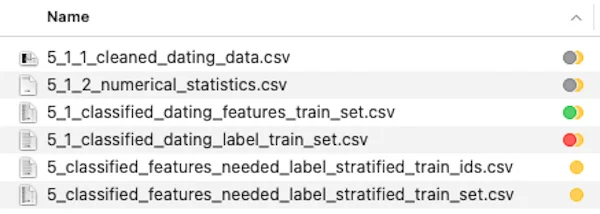

# Define file paths to save the features and label

features_path = os.path.join(DATING_PATH, "5_classified_dating_features_train_set.csv")

label_path = os.path.join(DATING_PATH, "5_classified_dating_label_train_set.csv")

# Save the features and label as separate CSV files

dating_training_features.to_csv(features_path, index=False)

dating_training_label.to_csv(label_path, index=False)

print(f"Features saved to: {features_path}")

print(f"Labels saved to: {label_path}")In this step, we load the dataset, split it into features and the label (mating_success), and add persistent_id to both.

The features and labels are then saved into separate CSV files for future use.

Once the data is loaded, we must clean and scale the numerical features.

This involves several tasks, such as imputing missing values and standardizing features.

Imputing missing values is necessary to fill in the missing data with a reasonable estimate and avoid losing valuable information on other features.

We use standardization to scale numerical features to have a mean of 0 and a standard deviation of 1, ensuring features contribute equally to models and so that no transformation will not bound values to a specific range, unlike min-max scaling.

We'll manually apply one-hot encoding for categorical variables to convert them into numerical representations.

One-hot encoding converts categorical variables into binary columns to make them suitable for machine-learning algorithms.

./chapter2/data2/data_cleaning3/simple3.py

import os

import pandas as pd

from sklearn.preprocessing import StandardScaler

# Define the path to the dataset

DATING_PATH = "./chapter2/datasets/dating/copies4"

def load_dating_data(dating_path=DATING_PATH):

"""

Load the dating data from a CSV file.

"""

csv_path = os.path.join(dating_path, "5_1_classified_dating_features_train_set.csv")

return pd.read_csv(csv_path)

# Load the training dataset

dating_data = load_dating_data()

# -------------------

# Cleaning Numeric Fields

# -------------------

# Initialize StandardScaler

scaler = StandardScaler()

# Define statistics to store for all numerical columns

numeric_columns_stats = []

# Persistent ID: Do nothing

# Age: Do nothing

# Height: Impute missing values with mean

dating_data['height'].fillna(dating_data['height'].mean(), inplace=True)

# dating_data['age'].fillna(dating_data['age'].mean(), inplace=True)

# Income: Replace -1 with mean

income_mean = dating_data['income'][dating_data['income'] != -1].mean()

dating_data['income'] = dating_data['income'].replace(-1, income_mean)

# List of numerical columns to scale

numeric_columns = ['height', 'income', 'age']

for col in numeric_columns:

# For each numerical column, compute the statistics and standardize the column

col_mean = dating_data[col].mean()

col_std = dating_data[col].std()

# Standardize the column (Z-score standardization)

dating_data[f'scaled_{col}'] = scaler.fit_transform(dating_data[[col]])

# Save the statistics

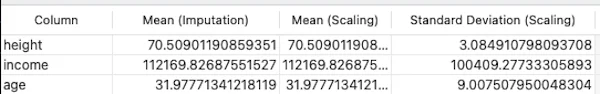

numeric_columns_stats.append({

'Column': col,

'Mean (Imputation)': dating_data[col].mean(), # Mean used for imputation

'Mean (Scaling)': col_mean, # Mean used for standardization

'Standard Deviation (Scaling)': col_std # Standard deviation used for standardization

})

# -------------------------

# Cleaning Categorical Fields

# -------------------------

# 1. Drop 'orientation' and 'sex'

dating_data.drop(['orientation', 'sex'], axis=1, inplace=True)

# 2. Clean 'classified_ethnicity': Drop 'not declared' rows

dating_data = dating_data[dating_data['classified_ethnicity'] != 'not declared']

# Manually one-hot encode 'classified_ethnicity'

ethnicity_categories = dating_data['classified_ethnicity'].unique()

for category in ethnicity_categories:

dating_data[f'ethnicity_{category}'] = (dating_data['classified_ethnicity'] == category).astype(int)

# Drop original 'classified_ethnicity' column

dating_data.drop(['classified_ethnicity'], axis=1, inplace=True)

# 3. Clean 'classified_body_type': Fill missing values with 'average'

dating_data['classified_body_type'].fillna('average', inplace=True)

# Manually one-hot encode 'classified_body_type'

body_type_categories = dating_data['classified_body_type'].unique()

for category in body_type_categories:

dating_data[f'body_type_{category}'] = (dating_data['classified_body_type'] == category).astype(int)

# Drop original 'classified_body_type' column

dating_data.drop(['classified_body_type'], axis=1, inplace=True)

# 4. Clean 'classified_drinks': Fill missing values with 'yes'

dating_data['classified_drinks'].fillna('yes', inplace=True)

# Manually one-hot encode 'classified_drinks'

drinks_categories = dating_data['classified_drinks'].unique()

for category in drinks_categories:

dating_data[f'drinks_{category}'] = (dating_data['classified_drinks'] == category).astype(int)

# Drop original 'classified_drinks' column

dating_data.drop(['classified_drinks'], axis=1, inplace=True)

# 5. Clean 'classified_smokes': Fill missing values with 'No'

dating_data['classified_smokes'].fillna('No', inplace=True)

# Manually one-hot encode 'classified_smokes'

smokes_categories = dating_data['classified_smokes'].unique()

for category in smokes_categories:

dating_data[f'smokes_{category}'] = (dating_data['classified_smokes'] == category).astype(int)

# Drop original 'classified_smokes' column

dating_data.drop(['classified_smokes'], axis=1, inplace=True)

# 6. Clean 'classified_drugs': Fill missing values with 'No'

dating_data['classified_drugs'].fillna('No', inplace=True)

# Manually one-hot encode 'classified_drugs'

drugs_categories = dating_data['classified_drugs'].unique()

for category in drugs_categories:

dating_data[f'drugs_{category}'] = (dating_data['classified_drugs'] == category).astype(int)

# Drop original 'classified_drugs' column

dating_data.drop(['classified_drugs'], axis=1, inplace=True)

# 7. Clean 'classified_religion': Fill missing values with 'not declared'

dating_data['classified_religion'].fillna('not declared', inplace=True)

# Manually one-hot encode 'classified_religion'

religion_categories = dating_data['classified_religion'].unique()

for category in religion_categories:

dating_data[f'religion_{category}'] = (dating_data['classified_religion'] == category).astype(int)

# Drop original 'classified_religion' column

dating_data.drop(['classified_religion'], axis=1, inplace=True)

# 8. Clean 'classified_speaks': Fill missing values with 'english'

dating_data['classified_speaks'].fillna('english', inplace=True)

# Manually one-hot encode 'classified_speaks'

speaks_categories = dating_data['classified_speaks'].unique()

for category in speaks_categories:

dating_data[f'speaks_{category}'] = (dating_data['classified_speaks'] == category).astype(int)

# Drop original 'classified_speaks' column

dating_data.drop(['classified_speaks'], axis=1, inplace=True)

# -----------------------------------

# Save the cleaned dataset as a CSV

# -----------------------------------

output_path = os.path.join(DATING_PATH, "5_1_1_cleaned_dating_data.csv")

dating_data.to_csv(output_path, index=False)

print(f"Cleaned dataset saved at: {output_path}")

# -------------------------------

# Save the statistical values CSVs

# -------------------------------

# Convert the statistics list to a DataFrame

stats_df = pd.DataFrame(numeric_columns_stats)

# Save all statistics in a single CSV file

stats_output_path = os.path.join(DATING_PATH, "5_1_2_numerical_statistics.csv")

stats_df.to_csv(stats_output_path, index=False)

print(f"All statistics saved at: {stats_output_path}")The cleaned dataset is then saved for later use.

Data preprocessing involves multiple steps, including handling missing data, scaling numerical features, encoding categorical variables, and verifying transformations.

Following these steps ensures that our dataset is clean, consistent, and ready for modelling.

The methods demonstrated in this blog offer a systematic approach to transforming raw data into a well-prepared dataset for machine learning tasks.

After preprocessing, it's important to ensure the transformations are applied correctly.

One way to do this is by inspecting individual rows, especially using unique identifiers like persistent_id to track and compare original versus preprocessed data.

Here, we allow the user to input a persistent_id and then compare the corresponding row in the original and preprocessed datasets.

This is a quality check to ensure that all transformations were applied correctly.

./chapter2/data2/comparator8.py

import os

import pandas as pd

# Define the path where the dataset is located

DATING_PATH = os.path.join("datasets", "dating/copies4/")

def load_dating_data(dating_path=DATING_PATH, file_name=None):

"""Load the data from a CSV file."""

csv_path = os.path.join(dating_path, file_name)

return pd.read_csv(csv_path)

# Load the original and preprocessed data

dating_data_original = load_dating_data(file_name="5_1_classified_dating_features_train_set.csv")

# dating_data_preprocessed = load_dating_data(file_name="5_1_3_preprocessed_dating_features.csv")

dating_data_preprocessed = load_dating_data(file_name="cleaned_dating_data.csv")

# Prompt user for a persistent_id

persistent_id = input("Enter a persistent_id to search: ")

# Check if the persistent_id exists in the preprocessed dataset

if persistent_id in dating_data_preprocessed['persistent_id'].values:

# Find the row in both datasets for the given persistent_id

original_row = dating_data_original[dating_data_original['persistent_id'] == persistent_id]

preprocessed_row = dating_data_preprocessed[dating_data_preprocessed['persistent_id'] == persistent_id]

# Print the results for the user in a readable format

print(f"\nOriginal Data for persistent_id: {persistent_id}")

print("\nFields and values in original data:")

for col, val in original_row.iloc[0].items(): # Iterate through the columns and values

print(f"{col}: {val}")

print("\nPreprocessed Data for persistent_id: {persistent_id}")

print("\nFields and values in preprocessed data:")

for col, val in preprocessed_row.iloc[0].items(): # Iterate through the columns and values

print(f"{col}: {val}")

else:

# Notify the user that the persistent_id was not found

print(f"\nError: persistent_id {persistent_id} does not exist in the preprocessed dataset.")

Note: All important parameters used to compute the cleaned dataset are saved in 5_1_2_numerical_statistics.cvs